This article explains how businesses can use AI Expert Systems, built on top of Large Language Models, to automate and streamline their workflows.

The term “Expertise without the Expense” refers to the idea that businesses can leverage AI Expert Systems to analyze and interpret complex data and to provide accurate insights and recommendations. The article discusses how AI Expert Systems are trained on various types of documents and content, and how, during preprocessing, documents are clustered together based on similarities in their structure and content.
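To make the clustering idea concrete, here is a minimal sketch of grouping documents by content similarity. It is not how Apogee or any 1000ml tool works internally; it assumes a simple bag-of-words representation with cosine similarity as a stand-in for whatever representation a production system would use. The function names (`bag_of_words`, `cosine_similarity`, `cluster_documents`), the sample documents and the `threshold` value are all hypothetical and chosen only for illustration.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lowercase the text and count its word tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def cluster_documents(documents, threshold=0.3):
    """Greedy clustering: each document joins the first cluster whose
    first member is similar enough, otherwise it starts a new cluster.
    The threshold is an arbitrary illustrative value."""
    vectors = [bag_of_words(doc) for doc in documents]
    clusters = []  # each cluster is a list of document indices
    for i, vec in enumerate(vectors):
        for cluster in clusters:
            if cosine_similarity(vec, vectors[cluster[0]]) >= threshold:
                cluster.append(i)
                break
        else:
            # No existing cluster was similar enough.
            clusters.append([i])
    return clusters


# Hypothetical sample documents: two leases and two invoices.
documents = [
    "This lease agreement is made between the landlord and the tenant.",
    "Invoice number 1042: total amount due within 30 days.",
    "This lease agreement between landlord and tenant covers the apartment.",
    "Invoice number 1043: total amount due upon receipt.",
]
clusters = cluster_documents(documents)
print(clusters)
```

With these sample documents, the two leases end up in one group and the two invoices in another. A real pipeline would likely also compare document structure (layout, headings, tables), which this sketch ignores.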

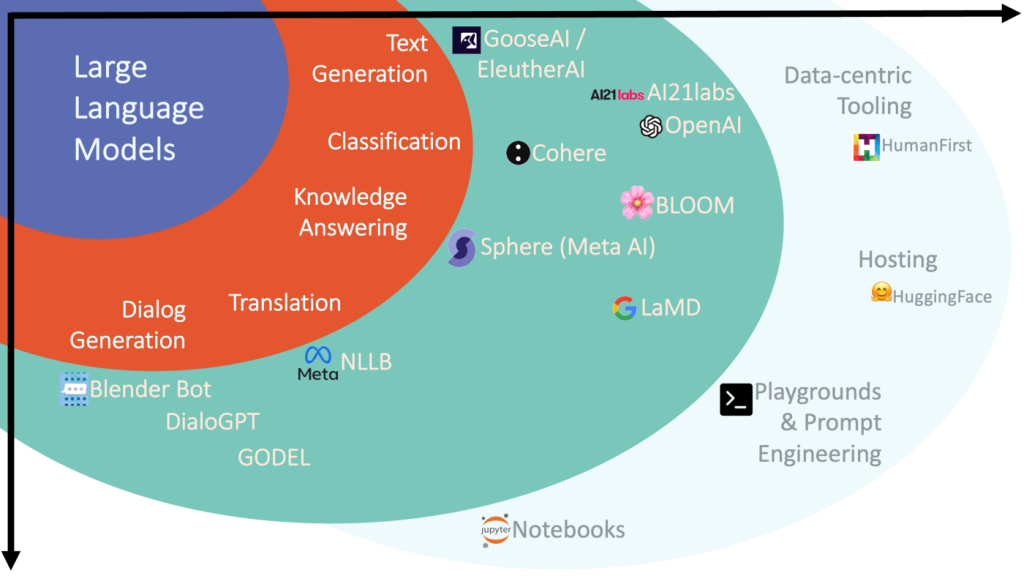

Today we will focus on Q&A Large Language Models (LLMs), explaining how they can be used in your business.

Q&A LLMs are computer systems that use natural language processing (NLP) and machine learning (ML) techniques to automatically answer questions asked by humans. They are created and fine-tuned using vast amounts of data and can answer a wide range of questions related to a specific domain.

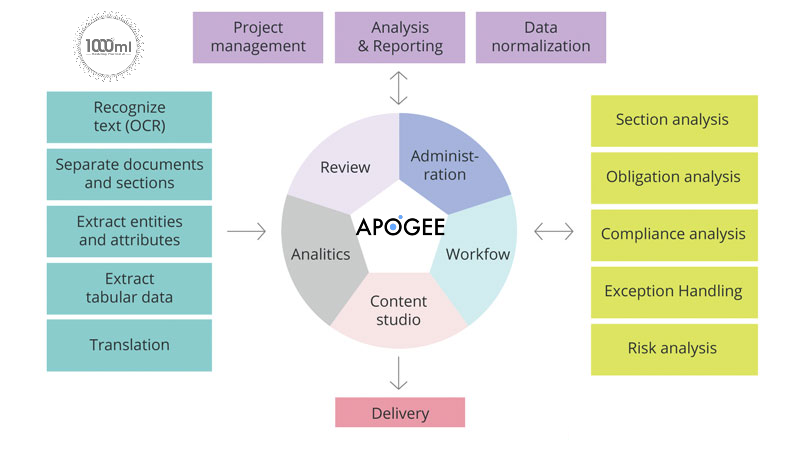

The Apogee Suite of NLP and AI tools, made by 1000ml, has helped small and medium businesses in several industries, large enterprises and government ministries gain an understanding of the intelligence that exists within their documents, contracts and, more generally, any content.

Our tools (Apogee, Zenith and Mensa) work together to allow for:

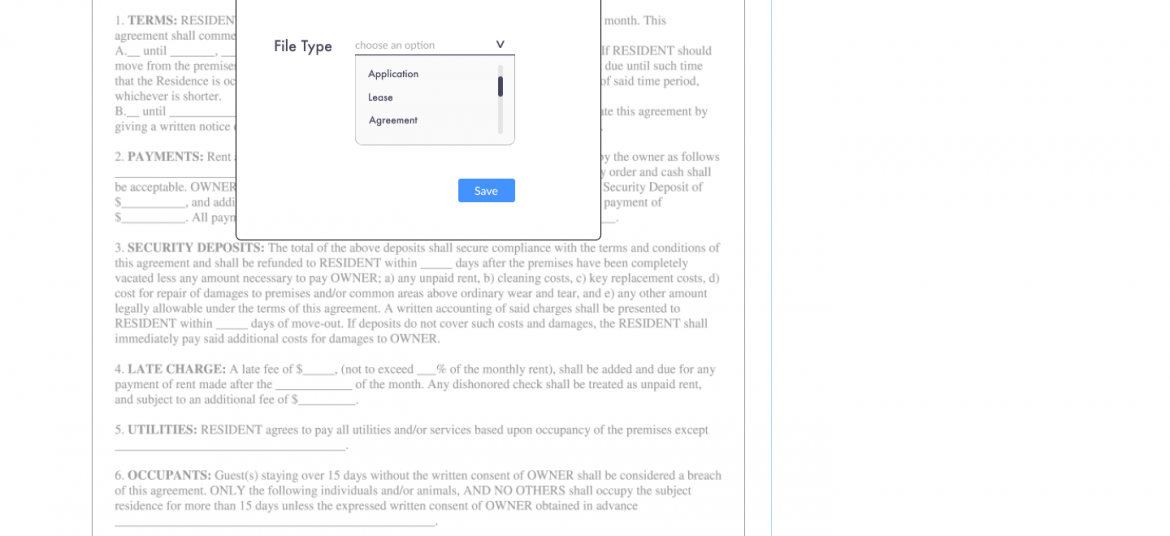

- Any document, contract and/or content ingested and understood

- Document (Type) Classification

- Content Summarization

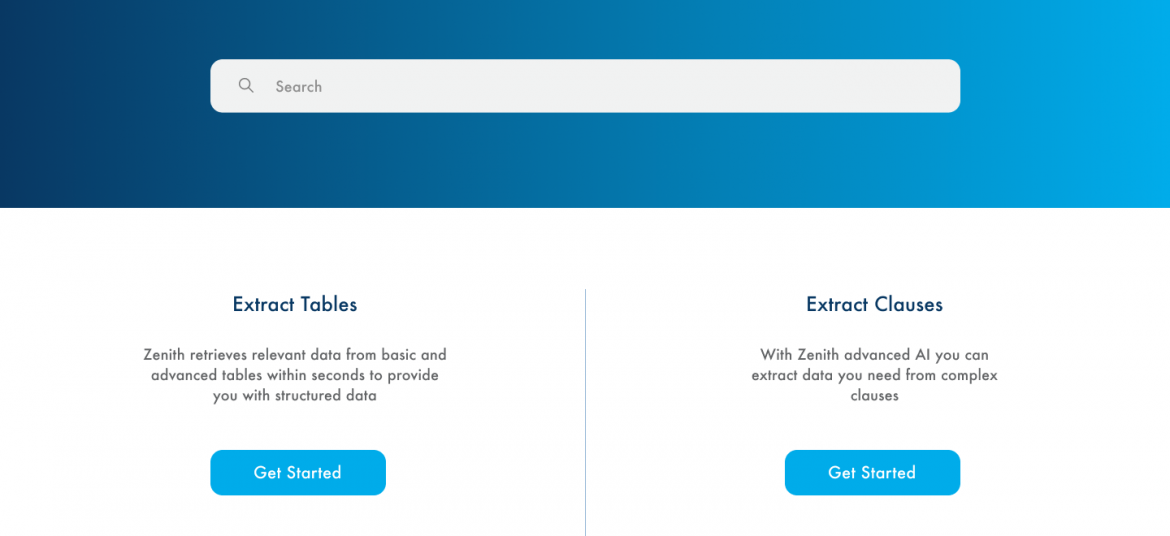

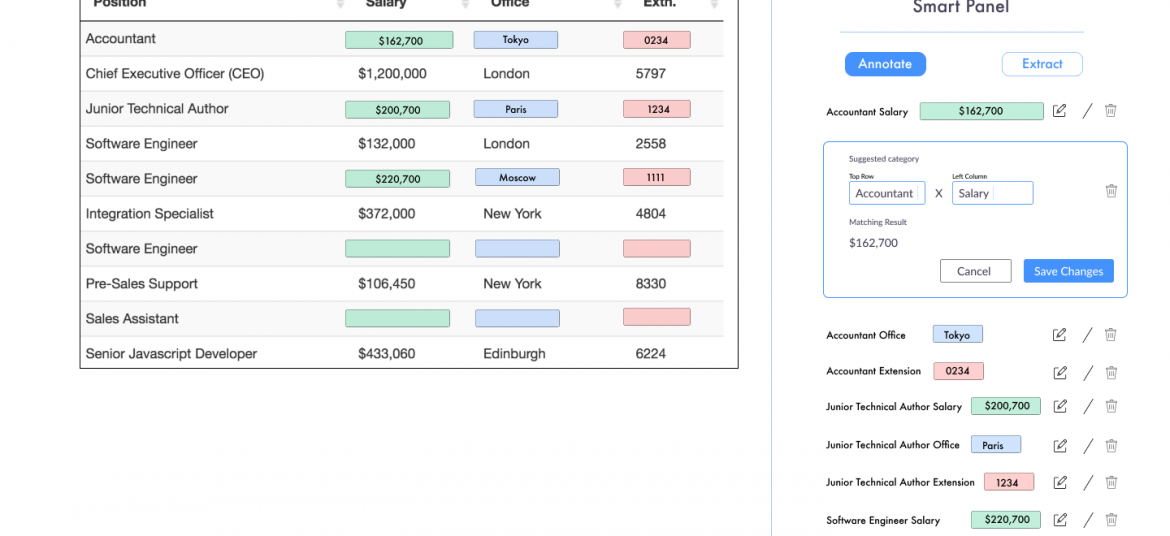

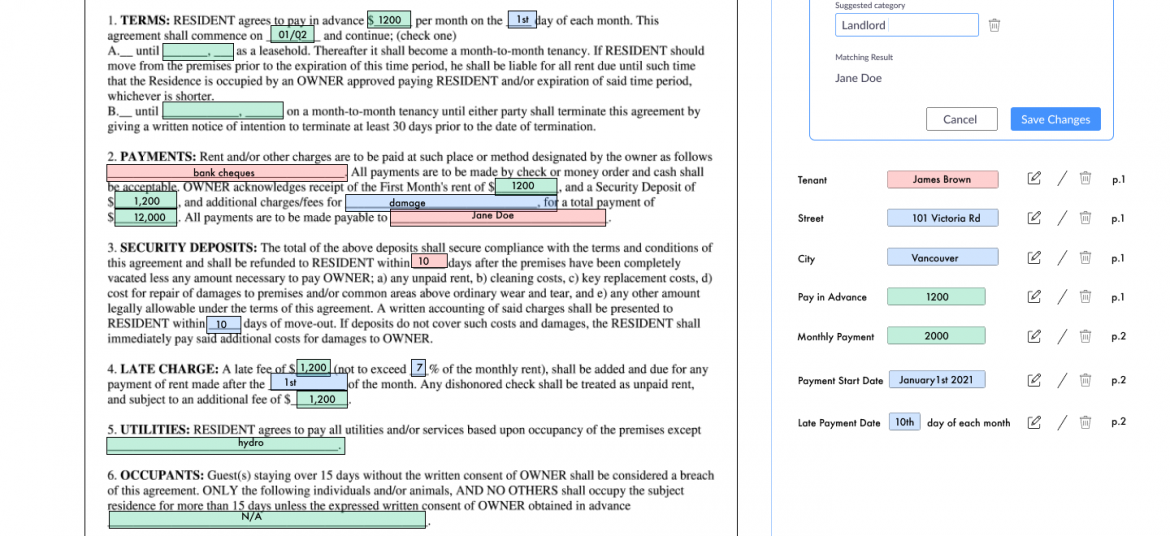

- Metadata (or text) Extraction

- Table (and embedded text) Extraction

- Conversational AI (chatbot)

- Search, JavaScript SDK and API

- Document Intelligence

- Intelligent Document Processing

- ERP NLP Data Augmentation

- Judicial Case Prediction Engine

- Digital Navigation AI

- No-configuration FAQ Bots

- and many more

Check out our next webinar dates below to find out how 1000ml’s tool works with your organization’s systems to create opportunities for Robotic Process Automation (RPA) and automatic, self-learning data pipelines.
