The excitement is understandable: the technology can offer a competitive edge, increase efficiency, reduce costs, and enable the creation of new products and services. However, AI is a complex technology, and many companies remain anxious about some of the challenges of adopting it, such as data bias and discrimination, data integrity, and job cuts, to mention just a few. Moreover, deploying AI can affect many business functions and disrupt existing operational and IT processes. Companies’ natural response is often to remain cautious about purchasing AI solutions. The risk perceptions of those making these build-or-buy decisions about AI therefore matter a great deal. In this article, we would like to share some of our observations of such risks from our work in the financial services industry.

Who is responsible if the technology does not work?

Companies, particularly large ones, tend to have in-house innovation teams charged with assessing new technologies and making recommendations about their viability and fit with the business. These teams consider many aspects when assessing an AI solution. Perhaps contrary to conventional wisdom, financial services companies have less reason to worry about oft-mentioned risks such as bias and discrimination. Why? They tend to use AI to automate non-customer-facing activities such as document processing, so they focus instead on technological issues. Because those technological uncertainties are hard to resolve in advance, it can be difficult to find an advocate who will take full responsibility for the purchase.

Successful adoption of an AI solution goes well beyond its technical merits; it requires integrating the solution with existing IT and operational processes. Will the AI model work once live data is fed into the system? Will the IT infrastructure be able to handle the AI solution? Will the in-house engineering and data science teams be able to manage and maintain the technology in the long run? There is always a risk that the AI technology does not perform as intended or expected. When uncertainty about the technology’s success within the company is this high, potential sponsors may be unwilling to bear the full weight of championing it, and thereby risk their own jobs should its deployment fail for reasons outside their control.

Are you sure the technology will work as intended?

Business leaders are the ones who ultimately make capital expenditure decisions. In many organisations, especially those under public scrutiny or in the public sector, getting the technology right the first time is essential. If the technology fails to deliver what was promised, the business leaders behind it can be seen as incompetent or, worse, as having misused public funds, potentially leading to reputational or even legal consequences.

With a solution as technically complex as AI, the leadership’s decision depends heavily on the information provided by their technical teams, particularly data scientists. These teams must understand the new solution and be able to communicate its benefits and challenges clearly to business leaders without a technical background. Vendors have an essential role to play here in ensuring that data scientists understand the technology fully and have detailed information about its business case.

When it comes to purchasing AI, issues such as the shortage of talent to implement and support the technology, budget considerations, integration with the existing IT infrastructure, and a viable business case are likely to be top of mind. However, many longer-term uncertainties remain for the data and leadership teams. Will the technology vendor still exist in five years’ time to provide the necessary technical support? How scalable is the proposed solution, given the state and nature of the existing IT system? Who will maintain the technology once it is onboarded, and what does that maintenance involve? From this vantage point, unless the decision-makers can somehow be 110% confident and comfortable with the technology about to be introduced, there is always a temptation to drop the AI project altogether. In short, no gain, no pain.

Past research has repeatedly warned of the various risks associated with AI technologies. Yet in a business context, it is often not the technology itself but the uncertainties surrounding its adoption that matter most. The risks perceived as a result of these uncertainties can be very real, real enough to discourage a company from taking up AI. Finding ways to mitigate these risks should be a priority for any business wishing to use AI to create value.

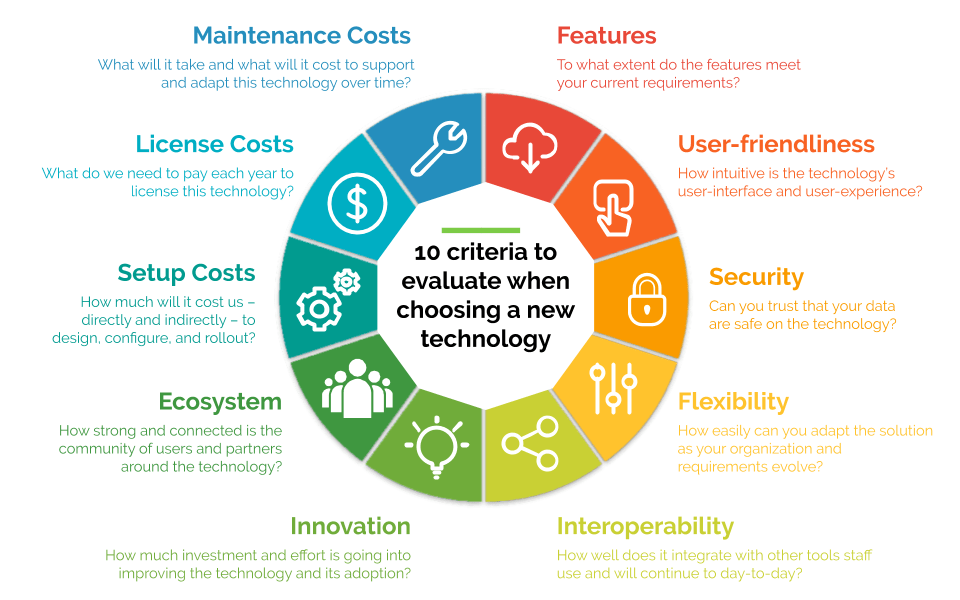

The 10 Considerations for Deciding on New Technologies

To what extent will this technology meet our needs today?

1. Features: Does the technology provide out-of-the-box features that meet your requirements? If not, can the gaps be filled through configuration?

List all the requirements you can currently imagine addressing with the new technology, grouping related ones and categorizing them into buckets such as Automation, Analytics, Mobile, etc. Next, label each requirement as ‘must-have’, ‘should-have’, or ‘could-have’, making sure each one is described specifically enough (e.g. “Automatically send email reminders when reports are overdue” or “Display data in a point map”). Then ask vendors to score their technologies honestly as follows: 3 points if the feature is provided out-of-the-box, 2 points if it can be configured without code, 1 point if it can be delivered through custom development, and 0 points if the requirement cannot be met. You don’t need a hundred-million-dollar annual budget or a 6-month competitive RFP to do this exercise.
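The scoring exercise above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed tool: the 3/2/1/0 points come from the text, but the priority weights (3 for must-have, 2 for should-have, 1 for could-have), the `score_vendor` function, and the rule that flags any must-have scoring 0 are our own assumptions.

```python
from dataclasses import dataclass

# Points per delivery method, following the 3/2/1/0 scale described above.
DELIVERY_POINTS = {
    "out_of_box": 3,    # provided out-of-the-box
    "configurable": 2,  # configurable without code
    "custom": 1,        # deliverable through custom development
    "not_met": 0,       # requirement cannot be met
}

# Hypothetical weights for the must/should/could priorities (an assumption,
# not part of the method above); adjust to suit your organization.
PRIORITY_WEIGHTS = {"must": 3, "should": 2, "could": 1}


@dataclass
class Requirement:
    bucket: str       # e.g. "Automation", "Analytics", "Mobile"
    description: str  # specific, testable wording
    priority: str     # "must", "should" or "could"


def score_vendor(requirements, answers):
    """Return (weighted score, maximum possible score, unmet must-haves).

    `answers` maps each requirement description to a DELIVERY_POINTS key;
    requirements the vendor did not answer are treated as "not_met".
    """
    total = 0
    maximum = 0
    unmet_musts = []
    for req in requirements:
        weight = PRIORITY_WEIGHTS[req.priority]
        points = DELIVERY_POINTS[answers.get(req.description, "not_met")]
        total += weight * points
        maximum += weight * DELIVERY_POINTS["out_of_box"]
        if req.priority == "must" and points == 0:
            unmet_musts.append(req.description)
    return total, maximum, unmet_musts


reqs = [
    Requirement("Automation",
                "Automatically send email reminders when reports are overdue",
                "must"),
    Requirement("Analytics", "Display data in a point map", "should"),
]
answers = {
    "Automatically send email reminders when reports are overdue": "configurable",
    "Display data in a point map": "custom",
}
print(score_vendor(reqs, answers))  # (8, 15, [])
```

Reporting unmet must-haves separately, rather than letting a high total hide them, is one design choice; some teams may prefer to disqualify such vendors outright.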

2. User-friendliness: How intuitive and easy to understand are the technology’s user interface and user experience?

You might have strong opinions yourself, but end-users are best positioned to evaluate this criterion. It’s important to look at user-friendliness through every lens in which the technology will be used. Often we see user-friendliness evaluated only from the perspective of end-users doing data collection or capture. That’s a crucial lens, but the technology’s friendliness for data management, data analysis, and data visualization may be equally important.

3. Security: Can you trust the technology to keep your data safe?

This criterion is not simply about ticking a box because the technology provider has a white paper on GDPR or ISO 27001; when it comes to data protection, GDPR compliance has as much to do with your practices as with the technology you use. Rather, think about security through two lenses: internal and external.

For internal security, evaluate how well you’ll be able to control users’ access and permissions with the technology, ensuring the right staff can see and do the right things. Look for technologies that allow you to control access and permissions at the object/table level, the feature level, and the field level. You’ll also want an audit trail that tracks who made which changes and when (for both data and metadata).

For external security, you want to ensure your system is safe and that only authenticated users have access. You might want features like single sign-on, two-factor authentication, and the ability to enforce password requirements. And assuming you’re storing data in the cloud, you’ll certainly want a technology provider with a strong reputation for how it manages its servers, both physically and digitally.
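To make the internal-security checks above more concrete, here is a toy sketch of object- and field-level permissions with an audit trail. It is purely illustrative: the roles, the `Survey` object, and the `can`/`edit_field` helpers are hypothetical, feature-level permissions are left out, and real platforms implement these controls in their own ways. The point is what to look for: access that is denied unless explicitly granted, and a record of who changed which value, and when.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical permission table: role -> object (table) -> field -> actions.
# Feature-level permissions are not modeled in this sketch.
PERMISSIONS = {
    "me_officer": {"Survey": {"respondent_name": {"read"},
                              "score": {"read", "edit"}}},
    "volunteer": {"Survey": {"score": {"read"}}},
}


def can(role, obj, field_name, action):
    """Object- and field-level check; anything not granted is denied."""
    return action in PERMISSIONS.get(role, {}).get(obj, {}).get(field_name, set())


@dataclass
class AuditTrail:
    entries: list = field(default_factory=list)

    def log(self, user, obj, field_name, old, new):
        # Record who changed which value, and when (UTC timestamp).
        self.entries.append({"user": user, "object": obj, "field": field_name,
                             "old": old, "new": new,
                             "at": datetime.now(timezone.utc)})


def edit_field(user, role, record, obj, field_name, value, trail):
    """Apply an edit only if permitted, logging it before the change."""
    if not can(role, obj, field_name, "edit"):
        raise PermissionError(f"{role} may not edit {obj}.{field_name}")
    trail.log(user, obj, field_name, record.get(field_name), value)
    record[field_name] = value


trail = AuditTrail()
survey = {"respondent_name": "A. Jones", "score": 3}
edit_field("amina", "me_officer", survey, "Survey", "score", 4, trail)
print(survey["score"], trail.entries[0]["old"])  # 4 3
print(can("volunteer", "Survey", "score", "edit"))  # False
```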

To what extent will the technology meet our future needs?

4. Flexibility: How easily can the solution evolve as your organization and its requirements evolve? Try to picture 5 years from now: is the technology propelling new ideas and ways of working, or is it struggling to keep up as the organization changes and matures?

To assess a technology’s flexibility, you’ll need to understand, for instance:

– How configurable are its automation features? Do business rules need to be hard-coded, or can they be managed through modular, adaptable automation tools?

– How configurable are its analytics features? Do reports and dashboards require customization or can they be adapted in a drag-and-drop way by end-users?

– How easy or hard is it to adapt or extend the underlying data model? Over time, you will certainly need to capture new data points, modify picklist values, inactivate certain fields, and introduce entirely new data tables. Do you need to rely on developers to make these sorts of commonplace changes, or will you be able to make them in-house?

– Can your organization extend the technology to handle major new requirements and entirely new use cases that may come up in the next 5 years?

5. Interoperability: How well does the technology ‘speak’ to the other technologies your organization uses? How easily can data flow from this tool to other tools, or vice versa? How well does it work with the other tools staff use, and will continue to use, day to day (e.g. for email, documents, analytics)?

If you picture a solution you will still be thrilled with five years from now and work backwards, one of the most important characteristics to optimize for is interoperability, or how easily the tool can integrate with other tools. On an interoperability scale from 1 to 10, a ‘1’ would be a tool on a custom, proprietary stack with no documented API and few examples of successful integration to date, while a ‘10’ would be a technology with an extensively documented set of APIs, a wide range of plug-and-play integrations, and myriad published examples of successful integrations, including with tools your organization uses. Investing in middleware can help speed up integration efforts and reduce the cost of maintaining integrations over time.

6. Innovation: How much investment and effort is going into improving the technology and its adoption? How many releases does the technology have per year, and how helpful are the new releases?

The best SaaS companies invest heavily in R&D, ensuring their products stay ahead of the game when it comes to features, user-friendliness, security, flexibility, and interoperability. When judging whether a tool will meet your future needs, don’t just consider what it offers today; extrapolate from its innovation track record to anticipate what it will offer in the future. To evaluate innovation, you’ll want to know how many releases per year to expect, what the current high-level roadmap looks like, how the roadmap is prioritized, and what kind of influence you’ll have (as a customer) on the roadmap.

7. Ecosystem: How strong and connected is the community of users and partners around the technology? How widely available is information that will help you troubleshoot or improve your implementation of the technology?

Perhaps the most underrated criterion to evaluate is the ecosystem of customers, partners, and knowledge surrounding a technology. On one side of the spectrum, you may have a brand new tool with shiny features but only a few customers, a few developers who can adapt it, and a few online resources. On the other side of the spectrum, you have platform solutions, like Salesforce, with a huge and well-connected online community providing help and training, hundreds of partners globally, 150,000+ customers, and thousands of hours of free, gamified online learning modules on Trailhead.

To what extent does the technology work within your budget?

8. Setup Costs: How much will it cost us, directly and indirectly, to design, configure, and roll out this tool?

First, work out what upfront investment will be needed to get the tool up and running. A familiar tool that requires little or no configuration (e.g. Google Docs or Box.com) will require much less investment than a tool that requires months of complex setup (e.g. a CRM or M&E system). Setup costs include not only the consulting services required to tailor the tool and train staff, but also the internal staff time required to help design, test, and adopt it. Setup costs will vary from provider to provider and will depend on whether you’re using a fixed-price or time-and-materials model. Unless your organization has developed firm, mature requirements or you need a very simple, straightforward solution, a capped and carefully managed time-and-materials contract with a partner you trust is usually the most reliable model for ensuring a win-win project.
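The arithmetic behind a capped time-and-materials contract is simple but worth making explicit, since internal staff time is easy to forget. The sketch below assumes the cap is a hard ceiling on what the partner can bill; the function names and all figures are hypothetical.

```python
def capped_tm_fee(hours, hourly_rate, cap):
    """Consulting fee under a capped time-and-materials contract.

    Assumes the cap is a hard ceiling on what the partner can bill.
    """
    return min(hours * hourly_rate, cap)


def setup_cost(consulting_fee, internal_hours, internal_hourly_cost):
    """Setup cost = external consulting fee + internal staff time spent
    designing, testing, and adopting the tool."""
    return consulting_fee + internal_hours * internal_hourly_cost


# Hypothetical figures for illustration only.
fee = capped_tm_fee(hours=300, hourly_rate=150, cap=40_000)
print(fee)  # 40000
print(setup_cost(fee, internal_hours=200, internal_hourly_cost=50))  # 50000
```

Here the uncapped bill would have been 45,000, so the cap absorbs the overrun, while the internal staff time still adds a fifth on top of the consulting fee.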

9. License Costs: What do we need to pay each year to license this technology?

Many great tools offer a ‘freemium’ model, but most technology that is worth using is licensed. And although many in the nonprofit sector have been taught to believe that open-source is always better, the costs of relying on open-source tools often outweigh the benefits. The advantages of licensing software are similar to the advantages of renting an apartment instead of crashing on your friends’ couch. When it comes to Salesforce, most nonprofits are eligible for 10 free licenses, but remember that Salesforce is only ‘free’ like a free puppy is free.

10. Maintenance Costs: What will it take and what will it cost to support this technology?

The final, and perhaps most important, component of the budget question is the cost of maintaining and supporting the technology once it has been launched. “Why is maintenance the most important cost component?” you ask.

There is a great answer to this question that breaks out examples of the corrective, adaptive, preventive, and perfective maintenance needs that come up, and cites five articles suggesting that maintenance costs tend to make up 40-80% of total cost of ownership (TCO).

The more customized your solution is, the higher your maintenance costs will be. Direct costs here may include:

– Staff time from System Administrators spent managing and adapting the system (anywhere from 20% to 300% of a full-time equivalent, depending on the system’s size and complexity)

– Support services from the technology provider or implementation partner to troubleshoot, debug, or make minor adaptations (often provided on a retainer basis or as part of a license fee)

– Additional services required to adapt the technology to meet your evolving requirements (mitigated by choosing a technology with excellent flexibility and a strong ecosystem)

– Buying and maintaining servers or any other hardware required to use the technology

– Time or services needed when retiring the software (exporting data, keeping the system running while a replacement is being rolled out)

Indirect costs, meanwhile, may include:

– The cost of staff frustration, burnout, or attrition resulting from using the unfriendly, inflexible, non-interoperable technology you chose (though it may not show up on the budget, there is a very real cost to your M&E Officers ripping their hair out)

– The inefficiencies and time wasted by relying on manual data manipulation rather than having easy answers to common questions at your fingertips
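Pulling the budget criteria together, a rough direct TCO can be sketched as setup plus licensing plus maintenance over the planning horizon. This is a simplified illustration under stated assumptions: the function name and every figure are hypothetical, annual maintenance is meant to bundle the direct items listed above (administrator time, support retainers, adaptation services, hardware), retirement is counted as maintenance, and the indirect costs are left out because they rarely have a reliable price tag.

```python
def total_cost_of_ownership(setup, annual_license, annual_maintenance,
                            years, retirement=0):
    """Direct TCO over `years`, and the share attributable to maintenance.

    Retirement costs (exporting data, running old and new systems in
    parallel) are counted as maintenance, as in the list of direct costs.
    Indirect costs are not modeled.
    """
    maintenance = annual_maintenance * years + retirement
    total = setup + annual_license * years + maintenance
    return {"total": total, "maintenance_share": maintenance / total}


# Hypothetical five-year figures for illustration only.
tco = total_cost_of_ownership(setup=50_000, annual_license=10_000,
                              annual_maintenance=30_000, years=5,
                              retirement=5_000)
print(tco["total"], round(tco["maintenance_share"], 2))  # 255000 0.61
```

With these made-up inputs, maintenance comes to roughly 61% of the five-year total; plugging in your own estimates quickly shows whether maintenance, rather than licensing or setup, dominates your budget.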