You need to look not only at how you’re developing the project but also at how well people in the business understand what it can do.

You’re not the only one who worries about an AI project failing; any project can struggle. An internal AI project can fall short for many reasons, and most of them trace back to how you manage and prepare the work.

General Bias In the Work

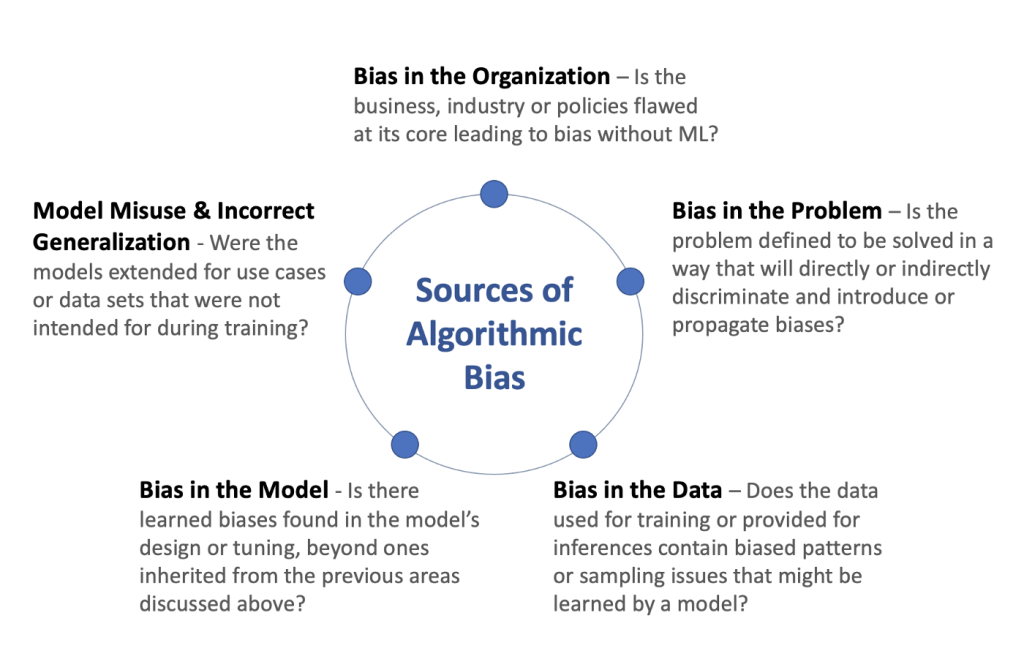

Algorithmic bias is a common reason internal AI projects fail. It refers to systematic errors in a system that skew results heavily in one direction. Sometimes the bias comes from the person who develops the AI, who might favor one set of data over another. But the data itself can also be skewed in ways that are hard to measure or review.

For example, an AI system might put more weight on one aspect of the data than on another. It may also ignore certain outputs or factors that explain why the data looks the way it does. If the team doesn’t understand how the data works, the project is more likely to fail, because the AI won’t recognize what it needs or how it should value things.
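One simple way to spot results that lean in one direction is to compare how often each group in the data receives a positive outcome. The sketch below is only an illustration: the `region` and `approved` fields and the sample decisions are hypothetical, and a large gap is a prompt for review rather than proof of bias.

```python
from collections import defaultdict


def positive_rate_by_group(records, group_key, outcome_key):
    """Return the share of positive outcomes for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        group = row[group_key]
        totals[group] += 1
        if row[outcome_key]:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def max_rate_gap(rates):
    """Largest difference in positive rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical decisions produced by an AI system (illustrative data only).
decisions = [
    {"region": "north", "approved": True},
    {"region": "north", "approved": True},
    {"region": "north", "approved": True},
    {"region": "north", "approved": False},
    {"region": "south", "approved": True},
    {"region": "south", "approved": False},
    {"region": "south", "approved": False},
    {"region": "south", "approved": False},
]

rates = positive_rate_by_group(decisions, "region", "approved")
gap = max_rate_gap(rates)
print(rates, gap)
```

A gap this wide between two groups would be worth tracing back to the training data and the features the system weighs most heavily.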

An Inability to Manage Data Well

A business might not know how to manage the data it collects for a project. The data must be prepared before the AI can read it:

• The data requires cleaning so that all its features are as easy to read as possible.

• Labels help the AI identify specific pieces of content, including definitions of what the AI should look for in its work.

• Different variables may matter for different tasks. An AI program could consider the context of what it receives and weigh data differently based on what it reads.

Without definitions and rules for how the AI should review and value data, the AI may not work well. It might not understand all the content it manages, which makes data management harder than anticipated.
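The cleaning and labeling steps above can be sketched in a few lines. This is a minimal example under stated assumptions: the `text` and `label` fields, the `ALLOWED_LABELS` set, and the sample records are all hypothetical, and a real project would define its own rules.

```python
# Hypothetical set of labels the project has agreed on.
ALLOWED_LABELS = {"invoice", "contract", "report"}


def clean_record(record):
    """Normalize whitespace and case; return None if a required field is empty."""
    text = " ".join(str(record.get("text") or "").split())
    label = str(record.get("label") or "").strip().lower()
    if not text or not label:
        return None
    return {"text": text, "label": label}


def prepare(records):
    """Split records into cleaned rows and rejected originals."""
    cleaned, rejected, seen = [], [], set()
    for record in records:
        row = clean_record(record)
        if row is None or row["label"] not in ALLOWED_LABELS:
            rejected.append(record)  # keep the original for manual review
            continue
        key = (row["text"], row["label"])
        if key in seen:  # drop exact duplicates after normalization
            continue
        seen.add(key)
        cleaned.append(row)
    return cleaned, rejected


raw = [
    {"text": "  Payment due   in 30 days ", "label": "Invoice"},
    {"text": "Payment due in 30 days", "label": "invoice"},  # duplicate
    {"text": "", "label": "report"},                         # missing text
    {"text": "Quarterly summary", "label": "memo"},          # unknown label
]

cleaned, rejected = prepare(raw)
print(cleaned)
print(len(rejected))
```

Keeping the rejected records, rather than silently discarding them, gives the team a list of gaps in its definitions to review.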

No Real Objectives

Every internal AI or data science project needs an objective to work well. The internal AI should be capable of doing three things:

1. Solve an identifiable business problem

2. Create a positive impact on how a business will run

3. Reduce the risk of problems happening

An AI project without clear business objectives might as well be a vanity project that the business doesn’t need. With nothing to aim for, it becomes harder for the project to succeed.

No Real Standards For Work

An AI project might not have a guiding hand to keep it afloat. The AI should work to a suitable set of standards:

• The AI must operate toward a defined objective.

• The AI must also account for all the data it gathers. It should review each piece it finds and then assign a value or concept to it.

• All actions the AI undertakes should be explainable.

• Algorithmic changes can occur, but there should be a reason for each change. There must also be a clear path for making changes when they are necessary.

• Everything the AI collects should have definitions the AI can understand. Some programs can also learn to connect multiple definitions, especially when analyzing similar content.

Algorithmic changes might not always work well, and a change in management can make them harder to carry out. A sponsor might leave, and the replacement may want modifications that differ from the original plan.

A suitable framework may also help manage the project and make it easier to follow. A poorly managed framework gives the AI no rules to work with, which makes it harder to operate. The AI may use whatever data it gathers to make arbitrary decisions, or it might make incorrect assumptions about what it collects. In some situations, the risk of the AI mishandling its data is too significant to ignore.
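One lightweight way to enforce the rule that every algorithmic change has a reason is to keep a change log that refuses entries without one. The sketch below is an assumption about how such a log might look; the `AlgorithmChange` and `ChangeLog` names, the fields, and the sample entry are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class AlgorithmChange:
    """One recorded change to the AI's algorithm."""
    version: str
    reason: str
    approved_by: str
    changed_on: date

    def __post_init__(self):
        # Reject entries that skip the justification or the sign-off.
        if not self.reason.strip():
            raise ValueError("every algorithm change needs a stated reason")
        if not self.approved_by.strip():
            raise ValueError("every algorithm change needs an approver")


@dataclass
class ChangeLog:
    entries: list = field(default_factory=list)

    def record(self, change):
        self.entries.append(change)
        return change

    def latest(self):
        return self.entries[-1] if self.entries else None


log = ChangeLog()
log.record(AlgorithmChange(
    version="1.1",
    reason="Retrained after the label definitions were revised",
    approved_by="project sponsor",
    changed_on=date(2024, 1, 15),  # illustrative date
))
print(log.latest())
```

A record like this also helps when a sponsor leaves, since the next person can see why each earlier change was made.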

Data Silos Are Also a Threat

Data silos may seem convenient for many businesses, as a silo helps keep data secure. But silos are often kept separate from one another, and some members of the organization find it hard to get the content they need from them.

Data silos make it harder for groups to share information, which in turn makes it harder for an AI program to review everything it needs. When silos are in place, the AI won’t have access to every database, so it becomes harder for it to operate.
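To see how much an AI program misses when data stays in silos, it can help to combine the sources on a shared key and list the records that appear in only some of them. This is a rough sketch: the `sales` and `support` silos, the `customer_id` key, and the field names are hypothetical.

```python
def merge_silos(silos, key):
    """Combine rows from several sources into one entry per key value.

    Each entry also tracks which sources contributed to it under "sources",
    so this sketch assumes no source uses "sources" as a field name.
    """
    merged = {}
    for source_name, rows in silos.items():
        for row in rows:
            entry = merged.setdefault(row[key], {"sources": []})
            entry["sources"].append(source_name)
            for field_name, value in row.items():
                if field_name != key:
                    # On conflicting fields, the first source seen wins.
                    entry.setdefault(field_name, value)
    return merged


def incomplete_keys(merged, silos):
    """Keys that are missing from at least one source."""
    expected = len(silos)
    return sorted(
        k for k, entry in merged.items() if len(set(entry["sources"])) < expected
    )


# Hypothetical department data sets kept in separate systems.
silos = {
    "sales": [
        {"customer_id": 1, "region": "east"},
        {"customer_id": 2, "region": "west"},
    ],
    "support": [
        {"customer_id": 1, "open_tickets": 3},
        {"customer_id": 3, "open_tickets": 1},
    ],
}

merged = merge_silos(silos, "customer_id")
missing = incomplete_keys(merged, silos)
print(merged[1])
print(missing)
```

Here only one customer has a complete record; the other two are visible to just one department, which is the gap an AI program would inherit.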

No Trust Among People Within the Business

The last reason so many internal AI projects fail is a lack of trust between people within the business. The AI and IT teams might have different perspectives on what they want to do. They will have differing metrics that are hard to predict, and it’s not always easy to get both sides on the same page.

Proper Preparation Is a Must

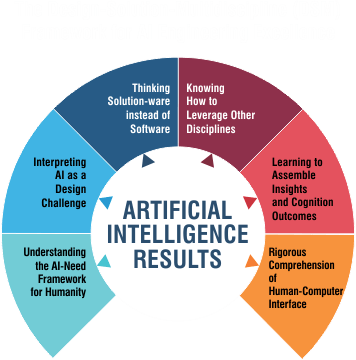

Managing an internal AI project can be daunting, but it isn’t impossible. You’ll need to look at how well your AI platform is designed and decide what data the AI system can read. Support and development from a talented team will be necessary for its success. You can plan your AI work around what you feel is right for your operation, but make sure you put enough time into figuring out what will work.

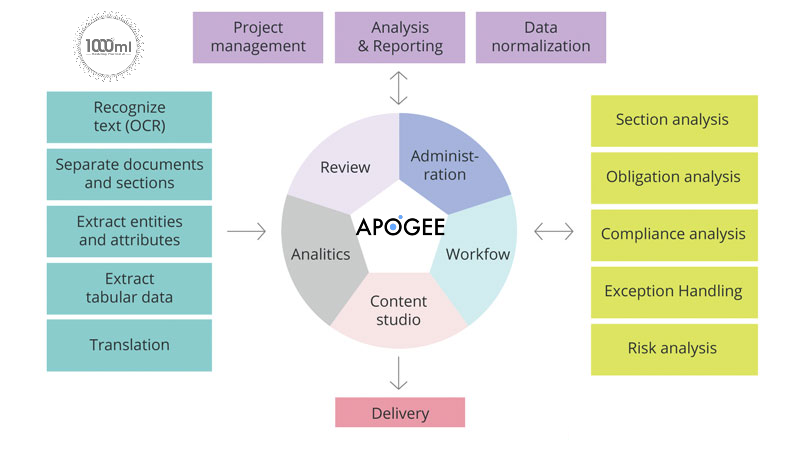

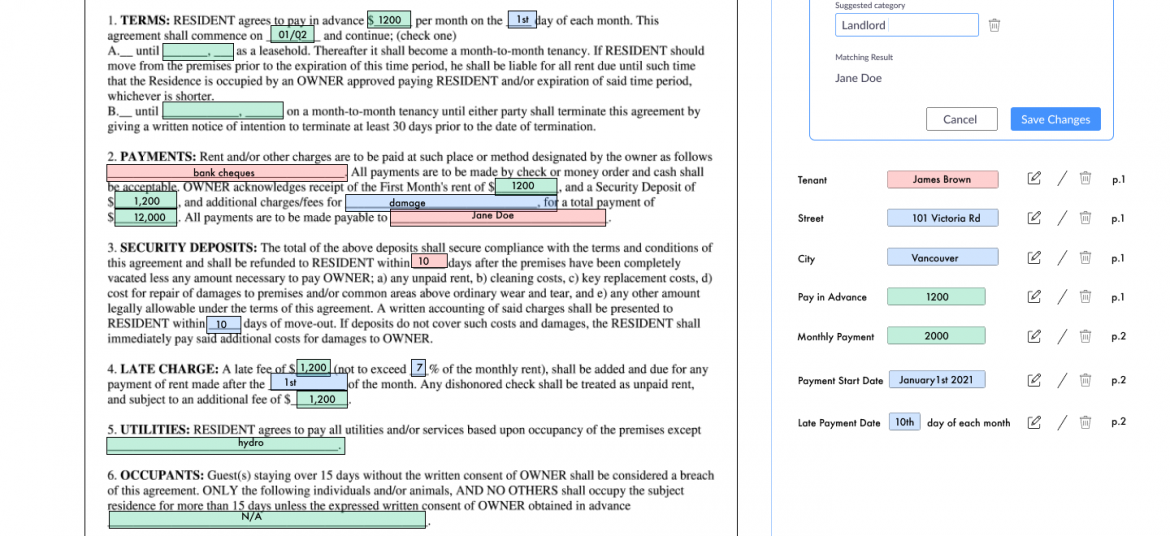

The Apogee suite of NLP and AI tools, made by 1000ml, has helped small and medium businesses in several industries, large enterprises, and government ministries understand the intelligence that exists within their documents, contracts, and other content.

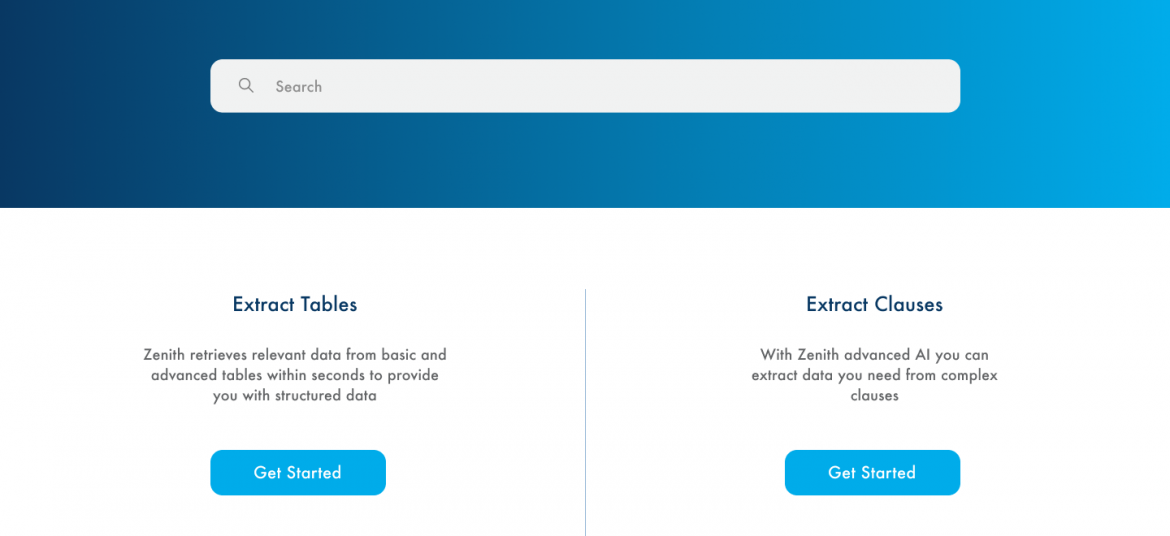

Our toolset – Apogee, Zenith and Mensa work together to allow for:

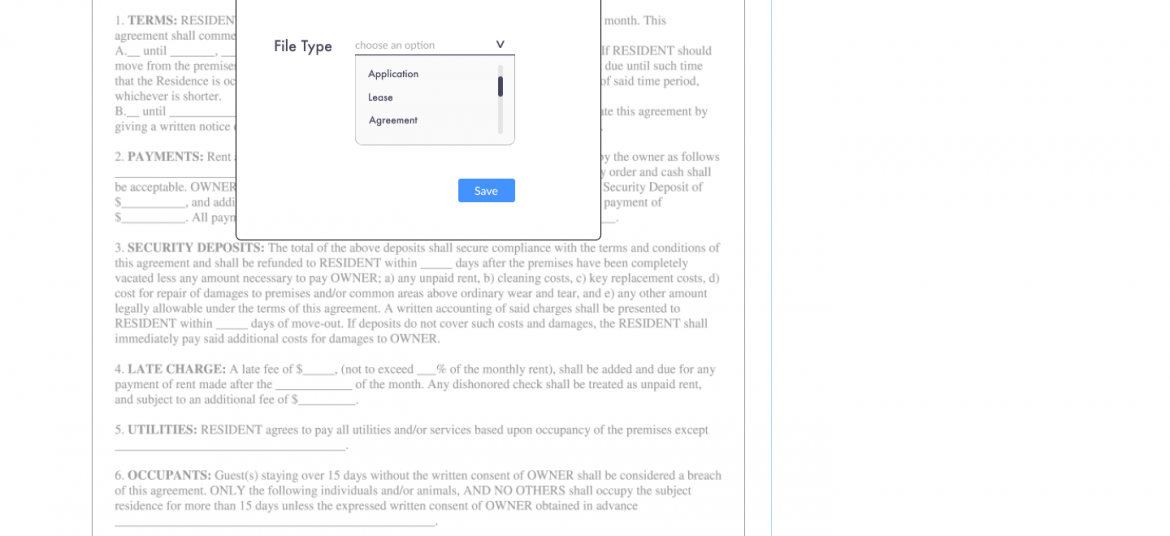

- Any document, contract and/or content ingested and understood

- Document (Type) Classification

- Content Summarization

- Metadata (or text) Extraction

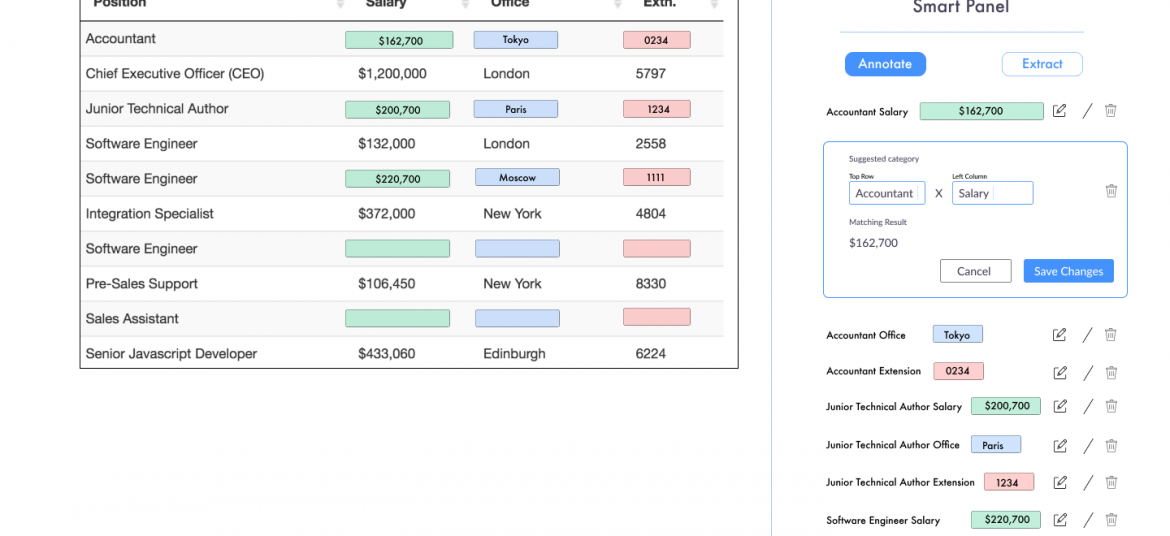

- Table (and embedded text) Extraction

- Conversational AI (chatbot)

Search, Javascript SDK and API

- Document Intelligence

- Intelligent Document Processing

- ERP NLP Data Augmentation

- Judicial Case Prediction Engine

- Digital Navigation AI

- No-configuration FAQ Bots

- and many more
